Here is the manual deployment process~

Installing the official k8s Dashboard

Run:
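The install command itself did not survive in this copy of the post. This is a minimal sketch. It assumes the upstream `recommended.yaml` from the kubernetes/dashboard repository at tag `v2.7.0`; the version is an assumption, so pick the release you want. The steps are wrapped in a shell function with a hypothetical name, so nothing runs until you call it.

```shell
# Assumption: Dashboard v2.7.0; the post does not say which version it deployed
DASHBOARD_VERSION="v2.7.0"
DASHBOARD_URL="https://raw.githubusercontent.com/kubernetes/dashboard/${DASHBOARD_VERSION}/aio/deploy/recommended.yaml"

# Hypothetical helper: download the manifest once, then apply the local copy
install_dashboard() {
  wget -O recommended.yaml "$DASHBOARD_URL"
  kubectl apply -f recommended.yaml
}
```

Source the snippet and run `install_dashboard`. Keeping the downloaded `recommended.yaml` also helps with the delete and reinstall steps later on.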

Once it finishes, run `kubectl get pods --namespace kubernetes-dashboard` to check the Dashboard's status.

`Running` means it is already up, but how do you access it?

Run:
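The command here is most likely `kubectl proxy`, which is the access method described in the upstream Dashboard README; treat that as an assumption. While the proxy runs, the Dashboard is served through the API server proxy at the URL below. That is also why it stops working as soon as the command exits. The helper name is hypothetical.

```shell
# kubectl proxy listens on 127.0.0.1:8001 by default; the Dashboard is reached
# through the API server's service proxy path
PROXY_URL="http://localhost:8001/api/v1/namespaces/kubernetes-dashboard/services/https:kubernetes-dashboard:/proxy/"

# Hypothetical helper: print the URL, then keep the proxy running in the foreground
dashboard_proxy() {
  echo "Open: $PROXY_URL"
  kubectl proxy
}
```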

This opens a port on the local machine, but the Dashboard is reachable only while the command keeps running, which is inconvenient.

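Run `kubectl -n kubernetes-dashboard get svc`. The output shows that the Dashboard Service is internal to the cluster (type `ClusterIP`), so it cannot be reached from outside.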

Run `kubectl -n kubernetes-dashboard edit svc kubernetes-dashboard` to edit the Dashboard's Service (svc) configuration.

Find `spec` -> `type`, change it to `NodePort`, save and quit with `:wq`, then run `kubectl -n kubernetes-dashboard get svc`.
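If you would rather not edit in vim, you can make the same change non-interactively with `kubectl patch`, which is easier to script. This sketch assumes the Service is named `kubernetes-dashboard`, as in the upstream manifest. The helper and variable names are hypothetical.

```shell
# Strategic-merge patch body: only spec.type is changed
NODEPORT_PATCH='{"spec":{"type":"NodePort"}}'

# Hypothetical helper: switch the Dashboard Service from ClusterIP to NodePort
dashboard_to_nodeport() {
  kubectl -n kubernetes-dashboard patch svc kubernetes-dashboard -p "$NODEPORT_PATCH"
  # Show the result; the TYPE column should now read NodePort
  kubectl -n kubernetes-dashboard get svc kubernetes-dashboard
}
```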

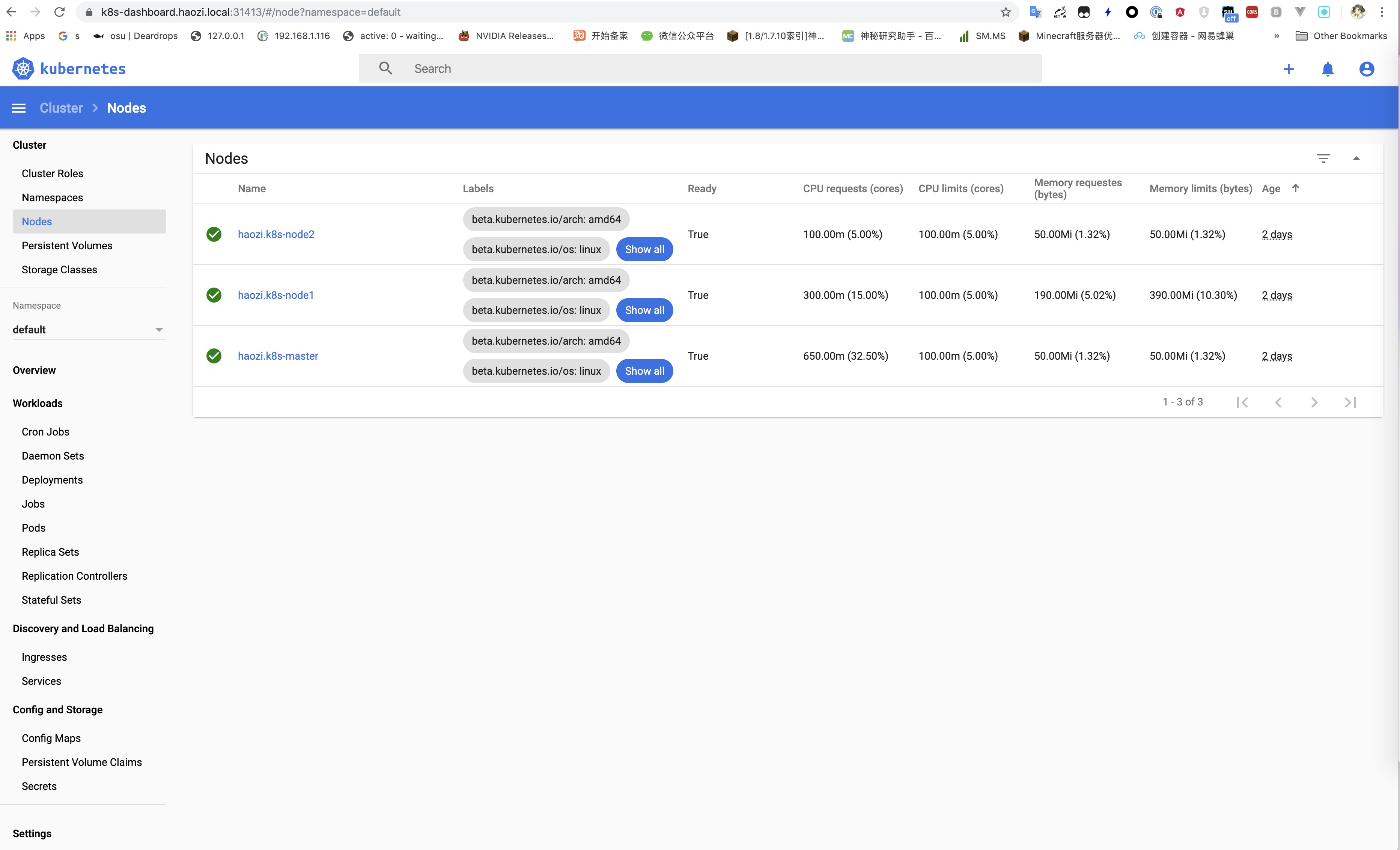

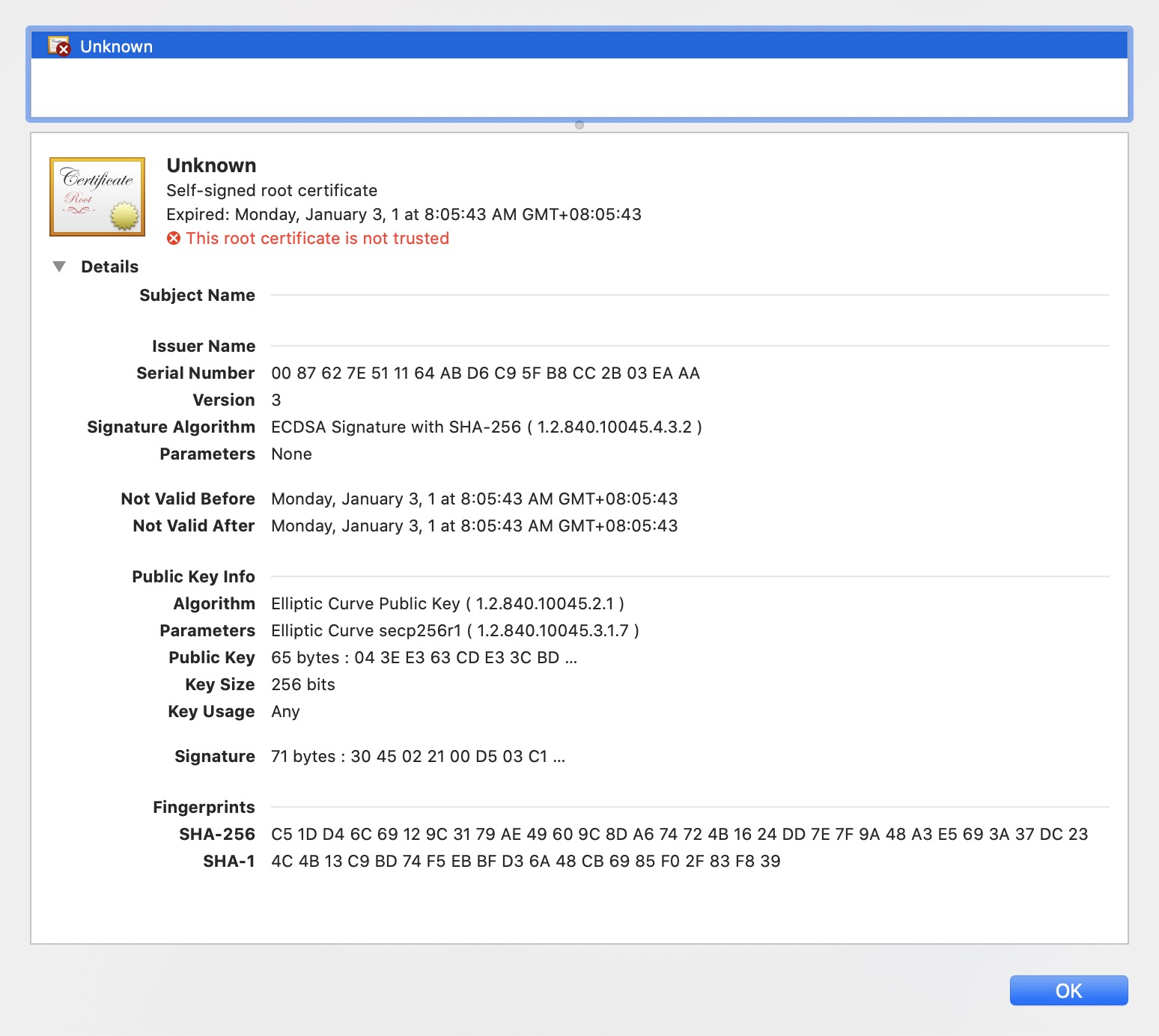

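The type of `kubernetes-dashboard` is now `NodePort`, and the randomly assigned port is 30522, so the Dashboard is reachable at `https://MasterIP:30522`. When you open it in Chrome, however, Chrome reports the certificate as invalid.

The warning can be bypassed, but let's fix it properly by giving the Dashboard a valid SSL certificate.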
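The NodePort is picked at random (30522 in this run), so you can read it back with a JSONPath query instead of scanning the table. This is a sketch: the helper name is hypothetical, and you must supply the node IP yourself.

```shell
# Hypothetical helper: print the Dashboard URL for a given node IP
dashboard_url() {
  node_ip="$1"   # your MasterIP
  # The upstream Service exposes a single port; its nodePort is the random one
  port=$(kubectl -n kubernetes-dashboard get svc kubernetes-dashboard \
    -o jsonpath='{.spec.ports[0].nodePort}')
  echo "https://${node_ip}:${port}"
}
```

For example, `dashboard_url 192.168.1.10` (a made-up address) prints the `https://` URL built from that IP and whatever port the cluster assigned.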

Getting an SSL certificate

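Issue a valid SSL certificate by following the article 自建CA并签发证书 (Building your own CA and issuing certificates), or get one from another certificate issuing service.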
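If you don't have that article at hand, here is a minimal OpenSSL sketch of a private CA signing a Dashboard certificate. It is an assumption, not the author's procedure. The IP `192.168.1.10` is a hypothetical stand-in for your MasterIP. The output names `usercert.pem` and `key.pem` are chosen to match the Secret step later on. Chrome checks the address against `subjectAltName`, not the CN. It also only accepts the certificate after `ca.pem` has been imported into the client machine's trusted root store.

```shell
set -eu

MASTER_IP="192.168.1.10"   # hypothetical; replace with your MasterIP

# 1. Private CA: key plus self-signed root certificate
openssl genrsa -out ca-key.pem 2048
openssl req -x509 -new -key ca-key.pem -sha256 -days 3650 \
  -subj "/CN=My Private CA" -out ca.pem

# 2. Dashboard key and certificate signing request
openssl genrsa -out key.pem 2048
openssl req -new -key key.pem -subj "/CN=${MASTER_IP}" -out dashboard.csr

# 3. Sign the CSR with the CA; the IP goes into subjectAltName for Chrome
printf 'subjectAltName=IP:%s\n' "$MASTER_IP" > san.ext
openssl x509 -req -in dashboard.csr -CA ca.pem -CAkey ca-key.pem -CAcreateserial \
  -days 365 -sha256 -extfile san.ext -out usercert.pem
```

Keep `ca-key.pem` private. Only `ca.pem` needs to be distributed to the machines that open the Dashboard.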

Applying the certificate

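Delete the old Dashboard Pod with `kubectl delete -f`, using the same manifest URL as the deployment above.

You can also wget the manifest to a local file first to avoid problems.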
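Deleting through the same manifest removes every object it created, including the `kubernetes-dashboard` namespace, which is why the next step recreates it. This sketch reuses a local copy of the manifest when one exists. The `v2.7.0` URL is an assumption, so substitute the manifest you actually installed from. The helper name is hypothetical.

```shell
# Assumption: the same upstream manifest the Dashboard was installed from
DASHBOARD_URL="https://raw.githubusercontent.com/kubernetes/dashboard/v2.7.0/aio/deploy/recommended.yaml"

# Hypothetical helper: delete everything the manifest created, namespace included
uninstall_dashboard() {
  # Download only if there is no local copy yet
  [ -f recommended.yaml ] || wget -O recommended.yaml "$DASHBOARD_URL"
  kubectl delete -f recommended.yaml
}
```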


The official yml deploys the Dashboard into the `kubernetes-dashboard` namespace, which we just deleted, so recreate it first: `kubectl create namespace kubernetes-dashboard`

Create a k8s Secret. `./usercert.pem` and `./key.pem` are the issued certificate and its private key, respectively.
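This is a sketch of recreating the namespace and loading the certificate pair into a Secret. The post does not name the Secret. `kubernetes-dashboard-certs` is an assumption taken from the upstream manifest, which mounts that Secret at `/certs` inside the Dashboard container. If the browser still shows an auto-generated certificate after reinstalling, compare the container arguments (`--auto-generate-certificates`, `--tls-cert-file`, `--tls-key-file`) with the Dashboard documentation for your version. The helper name is hypothetical.

```shell
# Assumption: Secret name used by the upstream manifest's /certs volume
CERT_SECRET="kubernetes-dashboard-certs"

# Hypothetical helper: recreate the namespace, then store the cert and key
create_dashboard_certs() {
  kubectl create namespace kubernetes-dashboard
  kubectl -n kubernetes-dashboard create secret generic "$CERT_SECRET" \
    --from-file=./usercert.pem --from-file=./key.pem
}
```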

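Then rerun the install command from the first step and change the Service type to NodePort again, as before. The Dashboard can now be accessed normally.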

Logging in

Token

To create users with proper access control, see kubernetes-Authenticating and Creating sample user. Since this is an internal network, I took the lazy route here.

Creating an admin

Create an `admin-user` ServiceAccount in the `kube-system` namespace.
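The manifest itself is missing from this copy of the post. The following mirrors the upstream "Creating sample user" example, with the namespace set to `kube-system` as the text says. Writing it through a heredoc keeps the step copy-pasteable. The file name `admin-user.yml` is hypothetical.

```shell
# Write the ServiceAccount manifest to a file for kubectl apply
cat > admin-user.yml <<'EOF'
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kube-system
EOF
```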

Creating the role binding

In a k8s cluster created with kubeadm, the `cluster-admin` ClusterRole already exists, so you only need a ClusterRoleBinding that binds it to the ServiceAccount.
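Here is a sketch of the binding, modeled on the upstream sample. It grants the existing `cluster-admin` ClusterRole to the `admin-user` ServiceAccount in `kube-system`. The binding name and file name are hypothetical. The account gets full cluster-wide rights. That matches the lazy internal-network choice above, but it is not something to expose publicly.

```shell
# Write the ClusterRoleBinding manifest to a file for kubectl apply
cat > admin-user-binding.yml <<'EOF'
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kube-system
EOF
```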

Apply each of the two yml files above with `kubectl apply -f xxxx.yml`.

Getting the token

Run this:
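The command for this step is missing from the post. This sketch covers both cluster generations. From Kubernetes 1.24 on, ServiceAccounts no longer get a long-lived token Secret automatically, so it tries `kubectl create token` first. That token is short-lived by default; see its `--duration` flag. On older clusters it falls back to reading the auto-created Secret, which is what the upstream guide used to do. The helper name is hypothetical.

```shell
# Hypothetical helper: print a login token for kube-system/admin-user
admin_user_token() {
  # Kubernetes 1.24+: request a token through the TokenRequest API
  if kubectl -n kube-system create token admin-user 2>/dev/null; then
    return 0
  fi
  # Older clusters: describe the auto-created admin-user token Secret
  kubectl -n kube-system describe secret \
    "$(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')"
}
```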

Copy the token from the output and paste it into the Token login on the Dashboard.

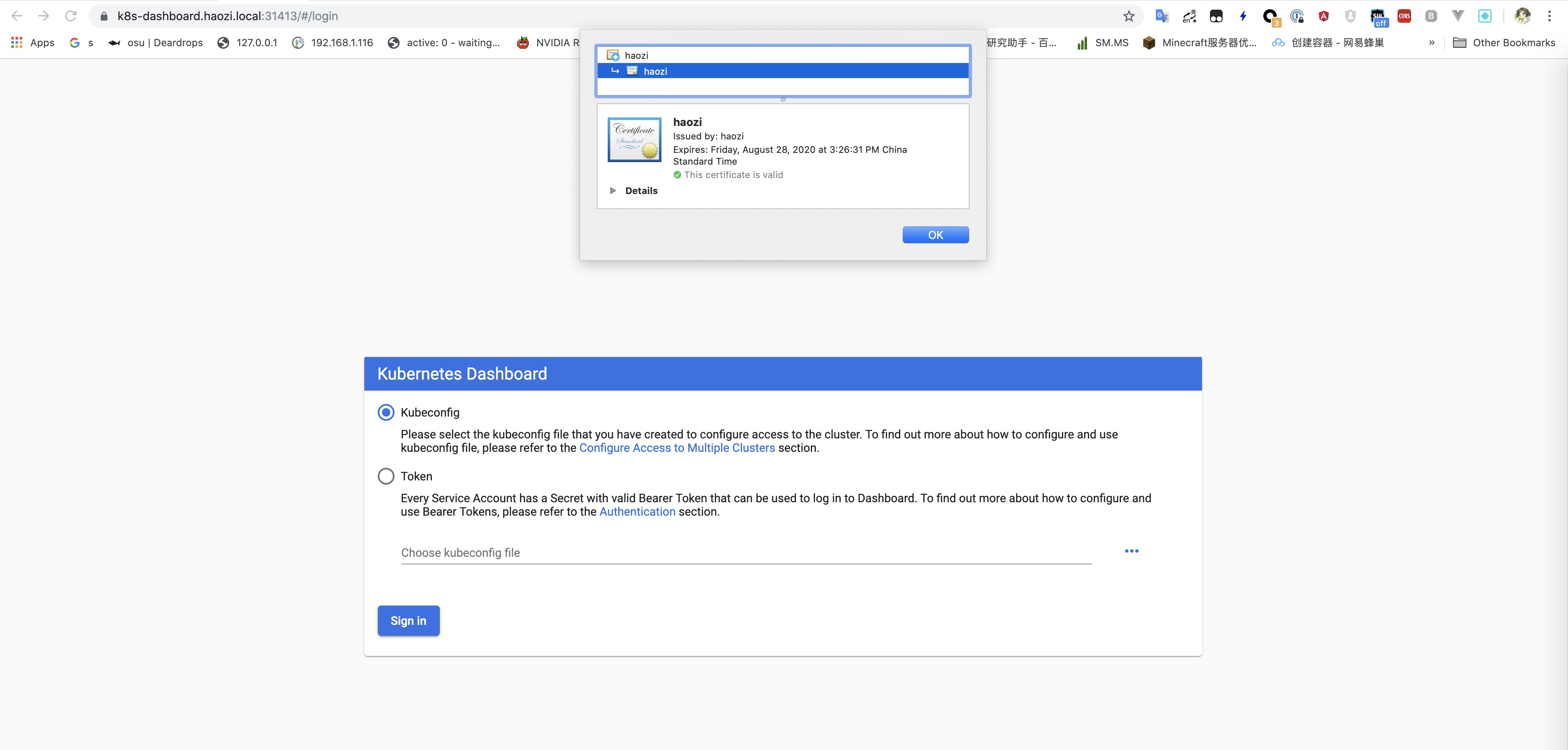

Kubeconfig

This one is simpler: copy out kubectl's config file and put the token generated above under `users` -> `user` -> `token`.
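This sketch makes the same edit with `kubectl config` instead of by hand. It works on a copy, so your own config stays untouched. It assumes the admin config lives at `~/.kube/config` and that its user entry is named `kubernetes-admin`, the kubeadm default; check with `kubectl config view`. The helper name is hypothetical.

```shell
# Hypothetical helper: build an uploadable kubeconfig that carries the token
make_dashboard_kubeconfig() {
  token="$1"   # the token printed in the previous step
  cp "$HOME/.kube/config" dashboard.kubeconfig
  # Assumption: the user entry is "kubernetes-admin" (kubeadm default)
  kubectl config set-credentials kubernetes-admin --token="$token" \
    --kubeconfig=dashboard.kubeconfig
}
```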

Then upload this config file on the login page.